A modular and interactive physical representation of a neural network.

Collaborator: Michael You, Electrical and Computer Engineering 2020

Built at HackPrinceton Spring 2018 (36 hours)

Top 10 Grand Prize Finalist

1517 Fund Prize Winner

My Role

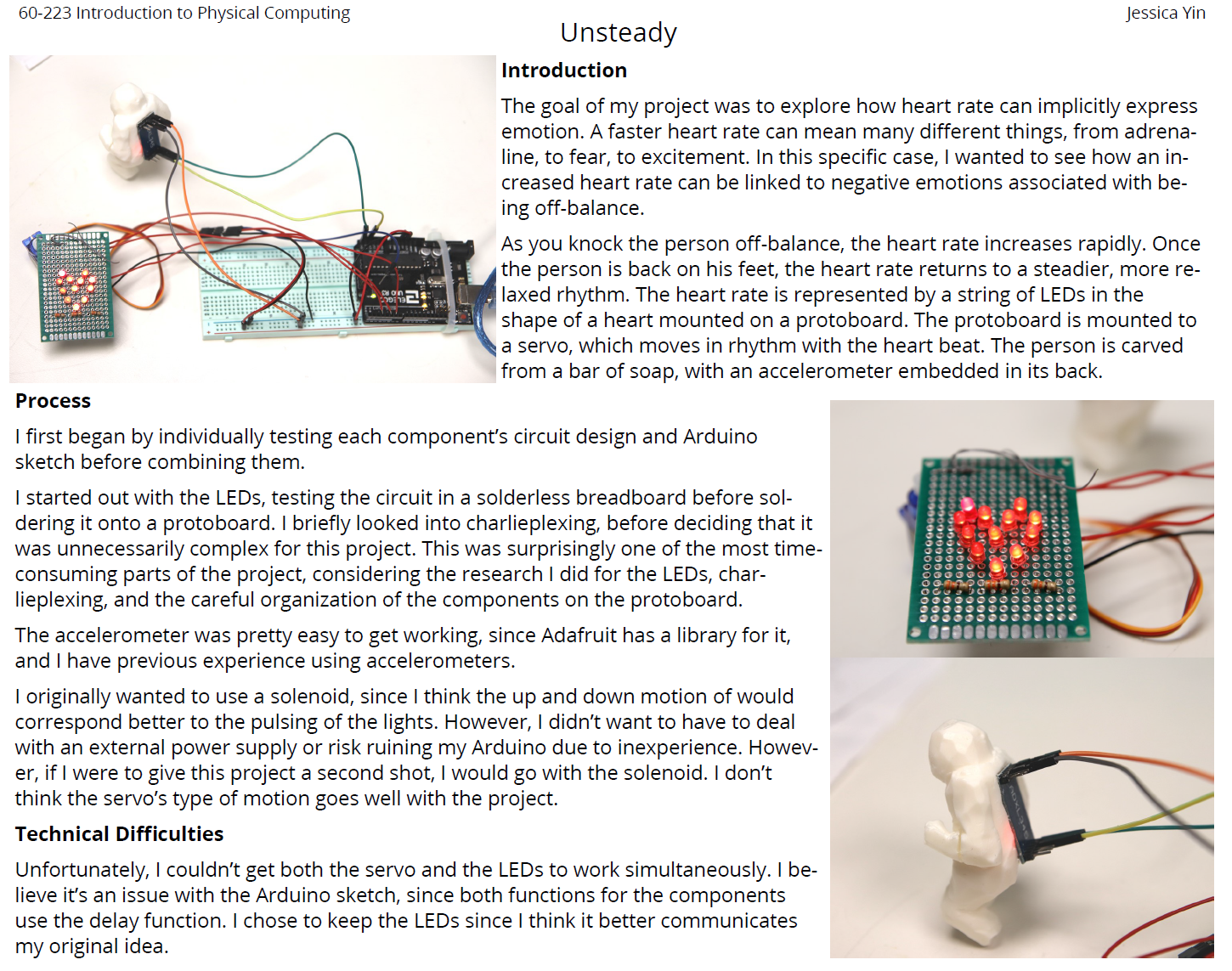

Collaborative ideation

CAD design

Rapid prototyping

User interface design

Laser cutting

Assembly

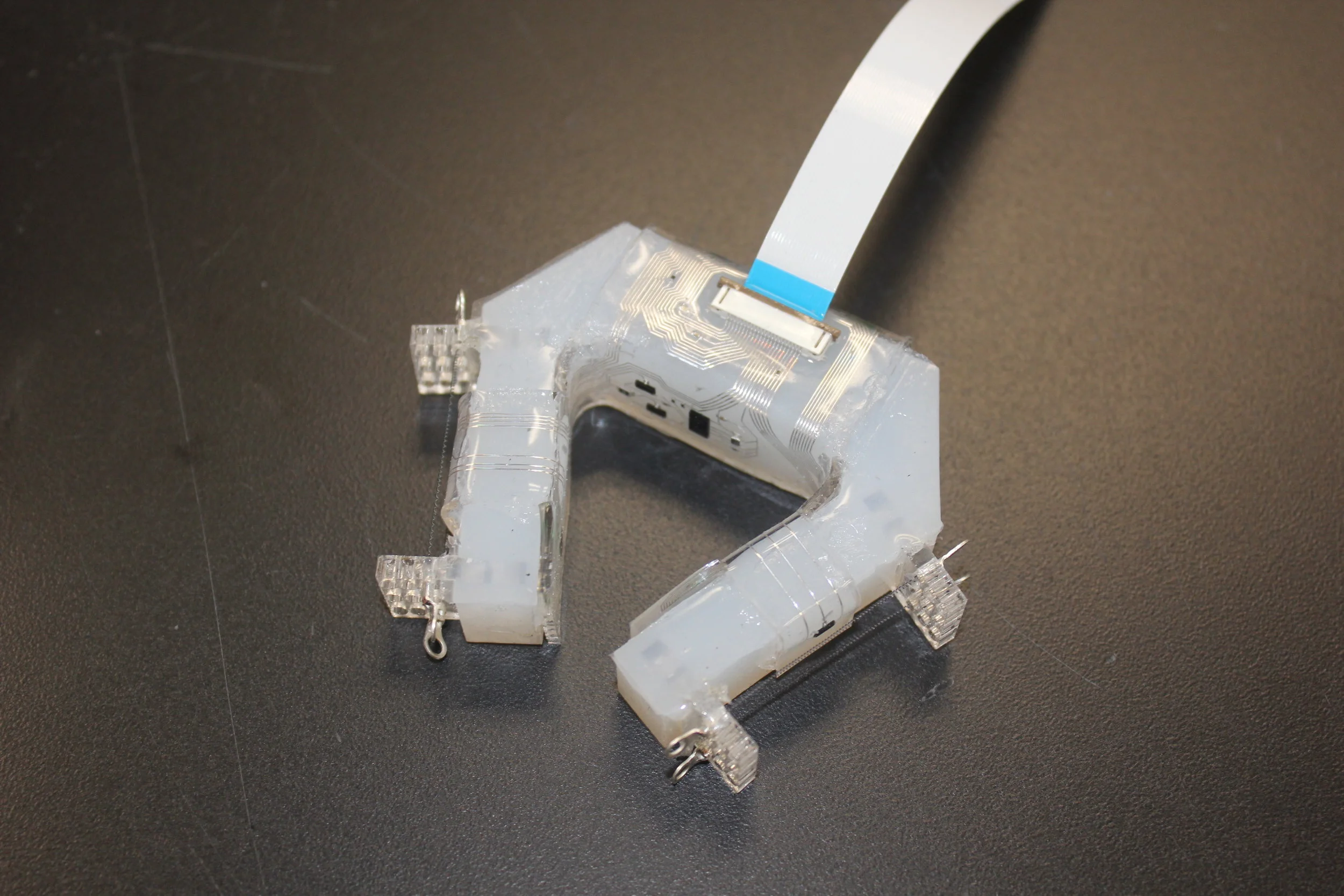

Pogo pin integration

Soldering

Hardware supplies logistics & planning

Pitch presentation

Inspiration

We were inspired by TensorFlow Playground (playground.tensorflow.org), which offers a visual, interactive way for beginners and experts alike to learn how neural networks function.

Description

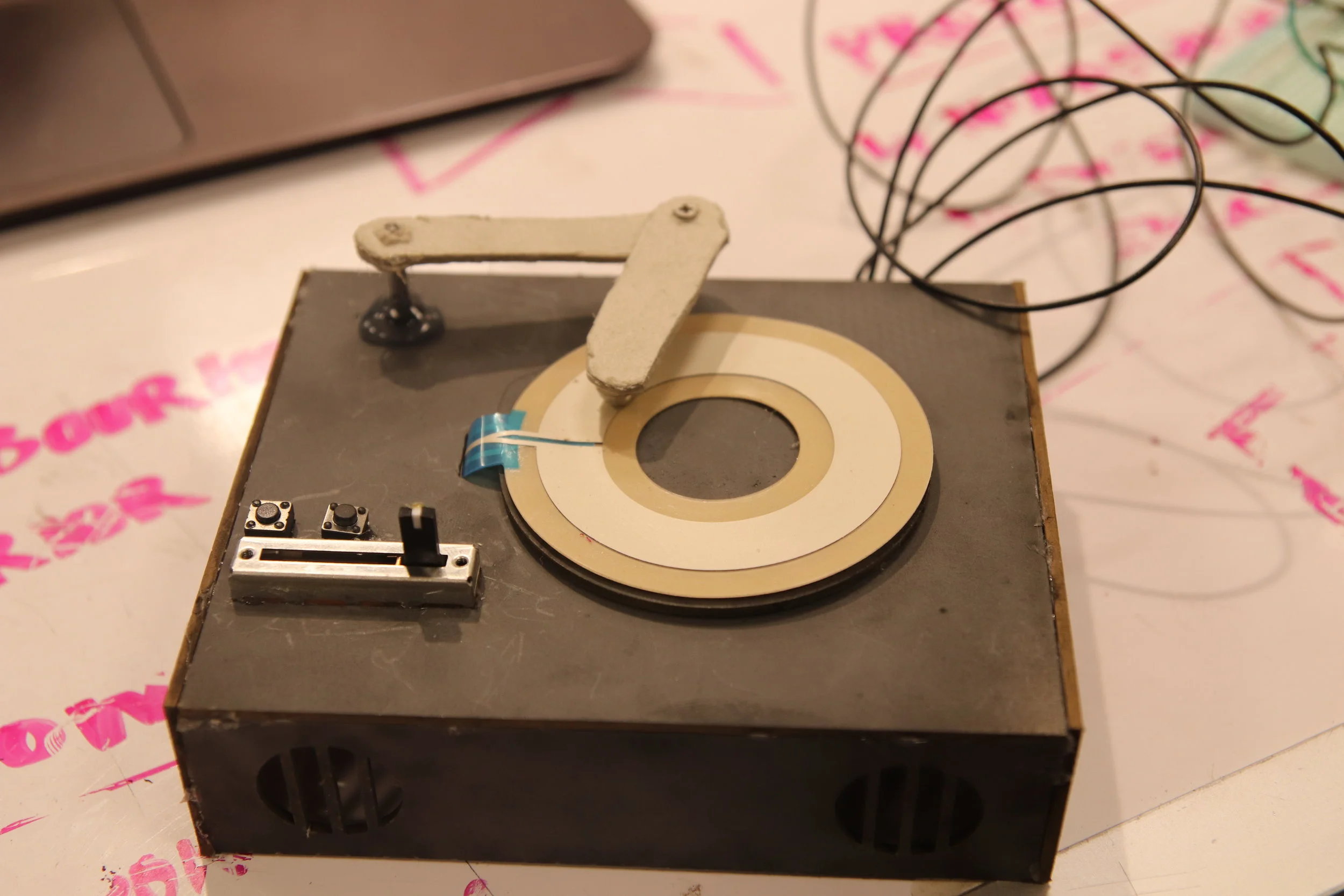

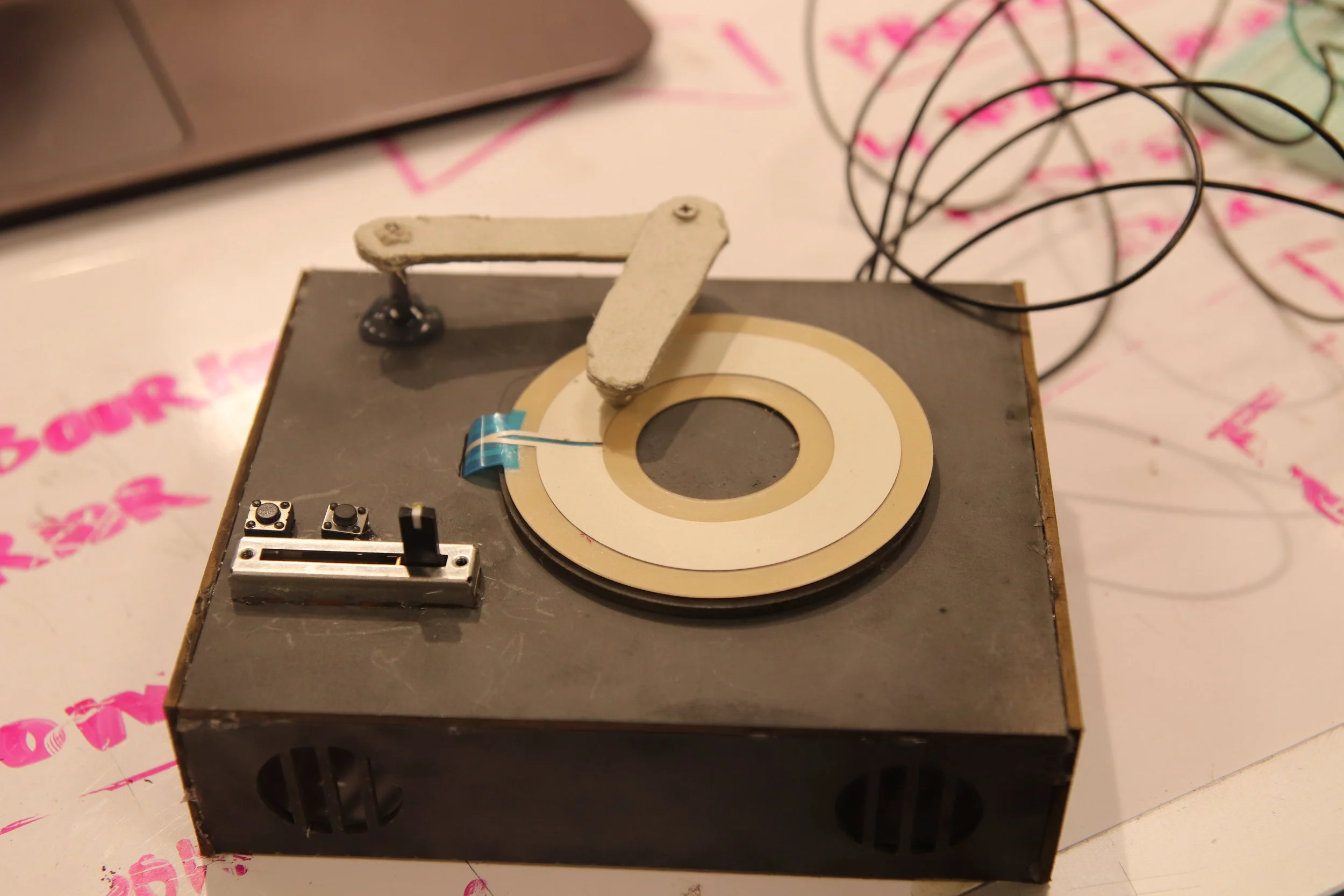

Neural networks are one of the premier models in machine learning, so it's no surprise that so many people are interested in learning about them and figuring out how to apply them to projects. However, for those without a background in computer science, how neural networks actually work can be an intimidating and abstract concept. For example, many of the data transformations performed by a neural network involve complex topology and math that even leading scientists do not fully understand. Omega^3 overcomes this barrier by offering an intuitive learning experience, using modular blocks and a simple user interface to show every aspect of how a neural network learns.

Omega^3 works by displaying every view of the neural network training process. The program starts by asking the user for a dataset to train on. Then, in real time, it incrementally shows the user the hidden layer data along with the current predictions and test error. With all of these views, the user can take a closer look at the inner machinery of a neural network.
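The training views described above can be sketched in a few lines of plain Python. This is only an illustrative stand-in, not the project's PyBrain code: the function names (`train_with_snapshots`, `forward`, and so on), the XOR dataset, the learning rate, and the snapshot interval are all assumptions chosen for the example. It trains a network with one hidden layer and, every few epochs, records the hidden layer activations, the current predictions, and the test error, which is the kind of data a plotting library such as Matplotlib could then draw.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_network(n_in, n_hidden, n_out, rng):
    """Random weights for one hidden layer; each row ends with a bias weight."""
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [[rng.uniform(-1, 1) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    return w_hidden, w_out


def forward(net, x):
    """Return (hidden activations, output activations) for one input list."""
    w_hidden, w_out = net
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in w_hidden]
    output = [sigmoid(sum(w * v for w, v in zip(row, hidden + [1.0]))) for row in w_out]
    return hidden, output


def train_step(net, x, target, lr):
    """One step of online backpropagation on squared error."""
    w_hidden, w_out = net
    hidden, output = forward(net, x)
    d_out = [(o - t) * o * (1 - o) for o, t in zip(output, target)]
    # Hidden deltas are computed before the output weights are changed.
    d_hid = [
        h * (1 - h) * sum(d_out[k] * w_out[k][j] for k in range(len(w_out)))
        for j, h in enumerate(hidden)
    ]
    for k, row in enumerate(w_out):
        for j, v in enumerate(hidden + [1.0]):
            row[j] -= lr * d_out[k] * v
    for j, row in enumerate(w_hidden):
        for i, v in enumerate(x + [1.0]):
            row[i] -= lr * d_hid[j] * v


def mean_squared_error(net, data):
    total = 0.0
    for x, target in data:
        _, output = forward(net, x)
        total += sum((o - t) ** 2 for o, t in zip(output, target))
    return total / len(data)


def train_with_snapshots(net, train, test, epochs, lr=0.5, every=500):
    """Train and record the three 'views' every `every` epochs."""
    snapshots = []
    for epoch in range(1, epochs + 1):
        for x, target in train:
            train_step(net, x, target, lr)
        if epoch % every == 0:
            snapshots.append({
                "epoch": epoch,
                "hidden": [forward(net, x)[0] for x, _ in test],
                "predictions": [forward(net, x)[1] for x, _ in test],
                "test_error": mean_squared_error(net, test),
            })
    return snapshots


# Hypothetical toy dataset (XOR), reused as the test set for brevity.
xor = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
       ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]
net = init_network(n_in=2, n_hidden=3, n_out=1, rng=random.Random(0))
snapshots = train_with_snapshots(net, xor, xor, epochs=2000)
for snap in snapshots:
    print(snap["epoch"], round(snap["test_error"], 4))
```

Each snapshot is a plain dictionary, so a display loop can redraw the hidden layer, prediction, and error views as soon as a new one arrives.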

In addition, all the blocks are movable, and the program automatically adjusts the neural network structure according to where the blocks are placed. Through this experience, users can learn not only how neural networks train on new data, but also the limitations of what neural networks can do.
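One way to picture how block placement could map to a network structure is sketched below. The details are assumptions made for illustration: the description does not say how block positions are sensed or encoded, so the `(column, row)` grid coordinates and the `layers_from_blocks` function are hypothetical. Each occupied column is treated as one hidden layer, and the number of blocks in that column is the number of nodes in the layer.

```python
from collections import Counter


def layers_from_blocks(block_positions, n_inputs, n_outputs):
    """Turn detected block slots into a list of layer sizes.

    block_positions: iterable of (column, row) pairs, one per detected block
    (a hypothetical encoding). Empty columns are skipped, and occupied
    columns become hidden layers ordered left to right.
    """
    per_column = Counter(col for col, _row in set(block_positions))
    hidden = [per_column[col] for col in sorted(per_column)]
    return [n_inputs] + hidden + [n_outputs]


# Three blocks in column 0, none in column 1, two in column 2.
blocks = [(0, 0), (0, 1), (0, 2), (2, 0), (2, 1)]
print(layers_from_blocks(blocks, n_inputs=2, n_outputs=1))  # [2, 3, 2, 1]

# Moving one block from column 0 to column 2 changes the structure.
moved = [(0, 0), (0, 1), (2, 0), (2, 1), (2, 2)]
print(layers_from_blocks(moved, n_inputs=2, n_outputs=1))  # [2, 2, 3, 1]
```

The resulting list of layer sizes is the kind of input that a network constructor in a library such as PyBrain would take, so rebuilding the model after a block moves reduces to recomputing this list.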

For example, a user who adds too many layers or nodes will probably find that the model overfits the data. A user who chooses too little model complexity may see signs of underfitting.
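A rough way to tell the two cases apart is to compare training error with test error. The sketch below is a simple heuristic under stated assumptions, not part of the project: the `diagnose_fit` function and its thresholds (`high_error`, `gap`) are hypothetical, and sensible values depend on the dataset and error metric.

```python
def diagnose_fit(train_error, test_error, high_error=0.2, gap=0.1):
    """Label a model from its errors using hypothetical thresholds.

    High training error suggests the model is too simple (underfitting).
    Low training error with much higher test error suggests the model has
    memorized the training data (overfitting).
    """
    if train_error > high_error:
        return "underfitting"
    if test_error - train_error > gap:
        return "overfitting"
    return "reasonable fit"


print(diagnose_fit(train_error=0.30, test_error=0.32))  # underfitting
print(diagnose_fit(train_error=0.01, test_error=0.25))  # overfitting
print(diagnose_fit(train_error=0.05, test_error=0.08))  # reasonable fit
```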

Overall, the freedom to play around and modify the model gives users a wide range of opportunities to discover fascinating and rarely seen aspects of neural networks.

Technology

-PyBrain, a Python library for machine learning

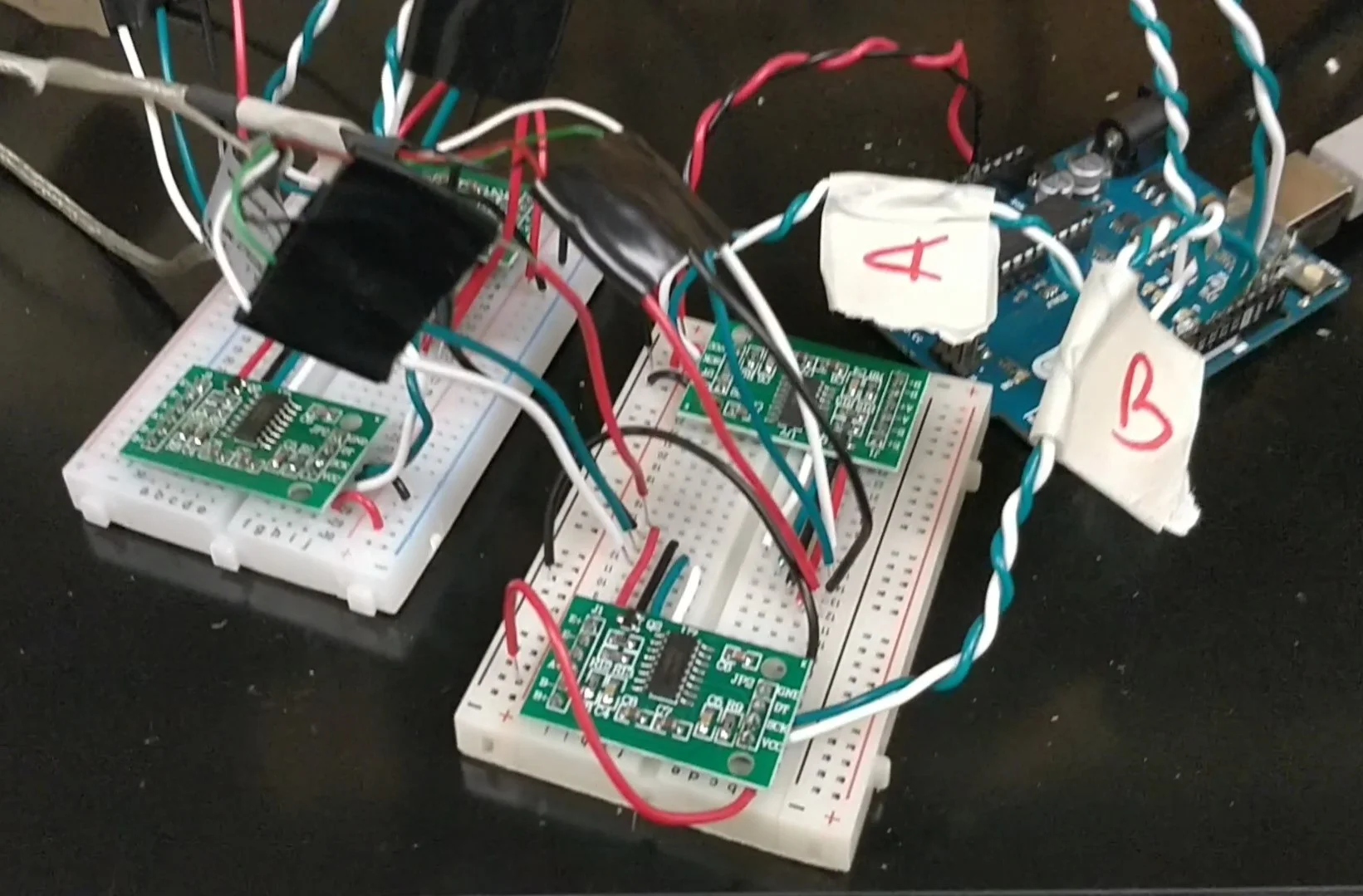

-Arduino Uno

-Raspberry Pi

-Matplotlib

-SolidWorks

-Laser cutter

-Pogo pins